In a previous post, we covered the basics of Three.js and the concept of 3D on the web. If you have not already read that, you should.

Some real-world examples of 3D on the web were shown in that post. Now, we will just try to get comfortable with building such experiences.

I will start with the hello world of 3D design, which is making cubes. We start by setting up a rendering engine and the size it should take up on the screen. The WebGLRenderer is used for this: it is given a canvas size to occupy in the browser, and its canvas element is then added to the DOM.

// WebGL Rendering Engine

const renderer = new THREE.WebGLRenderer();

renderer.setSize(ww, wh);

document.body.appendChild(renderer.domElement);

Note: The variable ww holds window.innerWidth and wh holds window.innerHeight.
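As a minimal sketch of where ww and wh could come from, the helper below reads the viewport size from a window-like object. The name getViewportSize and the 800 by 600 fallback used outside a browser are assumptions for illustration, not part of the original template.

```javascript
// Hypothetical helper: read the viewport size from a window-like object.
// The 800x600 fallback is an arbitrary assumption for non-browser environments.
function getViewportSize(win) {
  const ww = win ? win.innerWidth : 800;
  const wh = win ? win.innerHeight : 600;
  return { ww, wh };
}

// In a browser this picks up the real window; elsewhere it uses the fallback.
const { ww, wh } = getViewportSize(typeof window !== "undefined" ? window : null);
```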

In a 3D context, everything lives in a scene, which gets created next.

// New Scene

const scene = new THREE.Scene();

This template follows the order in which you would set up an actual theater stage, so after the scene we need the camera before anything else.

// Perspective Camera

const camera = new THREE.PerspectiveCamera(45, ww/wh, 0.1, 150);

// Position x,y,z axis of camera

camera.position.set(0, 0, 5);
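The four arguments to PerspectiveCamera are the vertical field of view in degrees, the aspect ratio, and the near and far clipping distances. As a rough worked example, the sketch below computes how tall a slice of the scene the camera sees at a given distance, and shows one way to keep the camera and renderer in step with the viewport. The helper names visibleHeightAt and syncToViewport are hypothetical.

```javascript
// Height of the visible area at `distance` in front of a perspective camera.
// fovDegrees is the vertical field of view, as passed to PerspectiveCamera.
function visibleHeightAt(fovDegrees, distance) {
  const fovRadians = (fovDegrees * Math.PI) / 180;
  return 2 * Math.tan(fovRadians / 2) * distance;
}

// Hypothetical resize helper: call it from a window "resize" listener.
function syncToViewport(renderer, camera, ww, wh) {
  renderer.setSize(ww, wh);
  camera.aspect = ww / wh;
  // The projection matrix must be rebuilt after changing camera parameters.
  camera.updateProjectionMatrix();
}

// With fov = 45 and the camera 5 units from the origin,
// roughly 4.14 units of height are visible at the origin.
const heightAtOrigin = visibleHeightAt(45, 5);
```

Anything taller than that at the origin would be cut off at the top and bottom of the canvas, which is a quick way to sanity-check object sizes against the camera position.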

The 3D models, as the main characters, are each made up of a geometry and a material. These are combined into a mesh, which is then added to the scene.

// create the mesh from geometry and material

/* const mesh = new THREE.Mesh(geometry, material); */

// adding objects to the scene

/* scene.add(mesh) */

The code in this section is commented out because the geometry and material have not been created. Hence, a mesh cannot exist and be added to the scene. I will get to geometries and materials in a bit.

The last part of the template is where the entire scene gets rendered to display.

// render the scene

const render = () => {

requestAnimationFrame(render);

renderer.render(scene, camera);

};

render();

With a rendering engine set up and stored in the renderer variable, that last part could simply have been:

renderer.render(scene, camera);

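but we have chosen to create a form of recursion with requestAnimationFrame, which asks the browser to call render again before the next repaint (typically around 60 times per second). That makes it easy to change the camera or the objects a little on every frame, creating an animated scene.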

Consider this example:

To produce the animated spin, the cube's rotation about the Y axis is incremented on every frame.

const render = () => {

requestAnimationFrame(render);

cube.rotation.y += 0.05;

renderer.render(scene, camera);

};
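Because requestAnimationFrame follows the display's refresh rate, a fixed increment per frame spins faster on a 120 Hz screen than on a 60 Hz one. One common alternative scales the increment by the time elapsed since the previous frame. It is sketched here with hypothetical names (advanceRotation, startSpin) and an assumed speed of half a turn per second.

```javascript
// Return the new rotation after `deltaSeconds`, at `radiansPerSecond`.
function advanceRotation(rotationY, deltaSeconds, radiansPerSecond) {
  return rotationY + radiansPerSecond * deltaSeconds;
}

// Hypothetical loop: `cube`, `renderer`, `scene` and `camera` are the objects
// from the template above. Only call this in a browser.
function startSpin(cube, renderer, scene, camera) {
  let previous = null;
  const frame = (timestampMs) => {
    // requestAnimationFrame passes a timestamp in milliseconds.
    const delta = previous === null ? 0 : (timestampMs - previous) / 1000;
    previous = timestampMs;
    cube.rotation.y = advanceRotation(cube.rotation.y, delta, Math.PI);
    renderer.render(scene, camera);
    requestAnimationFrame(frame);
  };
  requestAnimationFrame(frame);
}
```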

The demo uses a BoxGeometry with a MeshBasicMaterial.
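Here is a minimal sketch of how such a cube could be put together. The function takes the THREE namespace as a parameter (named T here) so that it does not assume how the library was loaded. The function name, the size of 1 and the color are illustrative choices, not values taken from the demo.

```javascript
// Build a cube mesh from a box geometry and a basic (unlit) material.
// T is the THREE namespace, however it was loaded on the page.
function createCube(T, size, color) {
  const geometry = new T.BoxGeometry(size, size, size);
  const material = new T.MeshBasicMaterial({ color });
  return new T.Mesh(geometry, material);
}

// Usage in a page where THREE and the scene from the template exist:
// const cube = createCube(THREE, 1, 0x00aaff);
// scene.add(cube);
```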

THREE.js has several geometries you can build models out of. The primitive shapes can be joined or modified to make much more complex shapes. There are plain Geometries and BufferGeometries; the latter are more efficient but harder to modify directly.

Materials

Materials define the color and texture of the geometry's surface. The material used also determines how the model responds to light. MeshBasicMaterial is not affected by light and does not need one, which is why that demo contains no light.

There are several other materials in the THREE documentation, but I will demonstrate the MeshLambertMaterial, MeshNormalMaterial, and MeshPhongMaterial, which I think you will need often.

The MeshStandardMaterial is popular for creating realistic models, but it comes with a computational cost. The MeshPhongMaterial can be a performant substitute for it.

MeshLambertMaterial

The MeshLambertMaterial is based on the reflection from a light source. Without a light, the scene appears dark and void. The demo uses a DirectionalLight.
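Here is a minimal sketch of a lit Lambert setup, assuming T is the THREE namespace. The function name addLitMesh, the color, and the light position are illustrative choices rather than the demo's actual values.

```javascript
// Add a Lambert-shaded mesh and a directional light to an existing scene.
// T is the THREE namespace; the colors and light position are arbitrary.
function addLitMesh(T, scene, geometry) {
  const material = new T.MeshLambertMaterial({ color: 0xff5533 });
  const mesh = new T.Mesh(geometry, material);

  // DirectionalLight(color, intensity) shines from its position toward
  // its target, which is the origin by default.
  const light = new T.DirectionalLight(0xffffff, 1);
  light.position.set(2, 3, 4);

  scene.add(mesh);
  scene.add(light);
  return { mesh, light };
}
```

Removing the scene.add(light) line is a quick way to see the "dark and void" result described above.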

MeshNormalMaterial

MeshNormalMaterial maps each surface normal to an RGB color to fill the geometry. It requires no light for display, and it is not affected by the presence of light.
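To make the mapping concrete: as far as I understand the shader, a unit normal with components between -1 and 1 is rescaled to the 0 to 1 color range by halving it and adding 0.5. The library does this in view space, so colors shift as the camera moves. Here is a small sketch with a hypothetical normalToRgb helper.

```javascript
// Map a normal vector to an RGB triple in [0, 1], component by component.
function normalToRgb(normal) {
  // Normalize first; fall back to 1 to avoid dividing by zero.
  const length = Math.hypot(normal.x, normal.y, normal.z) || 1;
  return {
    r: (normal.x / length) * 0.5 + 0.5,
    g: (normal.y / length) * 0.5 + 0.5,
    b: (normal.z / length) * 0.5 + 0.5,
  };
}

// A face pointing straight at the viewer (+z) comes out light blue.
const facingViewer = normalToRgb({ x: 0, y: 0, z: 1 }); // { r: 0.5, g: 0.5, b: 1 }
```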

MeshPhongMaterial

Want something different from the dark scene background? You can set a different scene background color with:

scene.background = new THREE.Color(0x00ff00);

Or you could set a fancy CSS background and enable alpha rendering:

const renderer = new THREE.WebGLRenderer({ alpha: true });

With the alpha: true option on the WebGLRenderer, the canvas is transparent, so the CSS background behind it is shown instead. The demo above also introduces an earth texture on a SphereGeometry.

The texture is applied with TextureLoader, and it is a typical example of how we can start applying 3D to fit right into our users' web experience.
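Here is a minimal sketch of a textured sphere, assuming T is the THREE namespace. The function name, the segment counts and the file name in the usage comment are placeholders rather than the demo's actual values. Since MeshPhongMaterial reacts to light, the scene would also need a light for the texture to be visible.

```javascript
// Create a textured sphere. T is the THREE namespace and `url` points at an
// image file; both are supplied by the caller.
function createTexturedSphere(T, url) {
  const texture = new T.TextureLoader().load(url); // loads asynchronously
  const geometry = new T.SphereGeometry(1, 32, 32); // radius, width/height segments
  const material = new T.MeshPhongMaterial({ map: texture });
  return new T.Mesh(geometry, material);
}

// Usage (the file name is a placeholder, not the texture used in the demo):
// scene.add(createTexturedSphere(THREE, "earth.jpg"));
```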

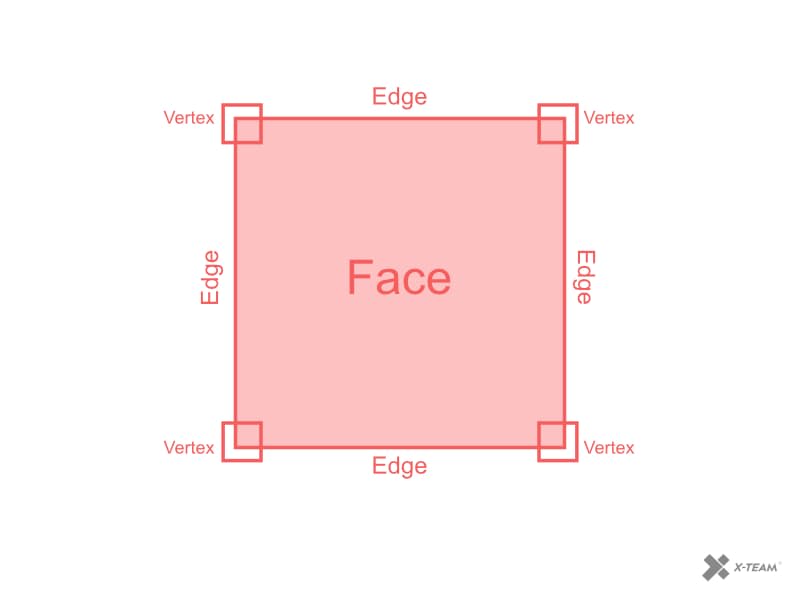

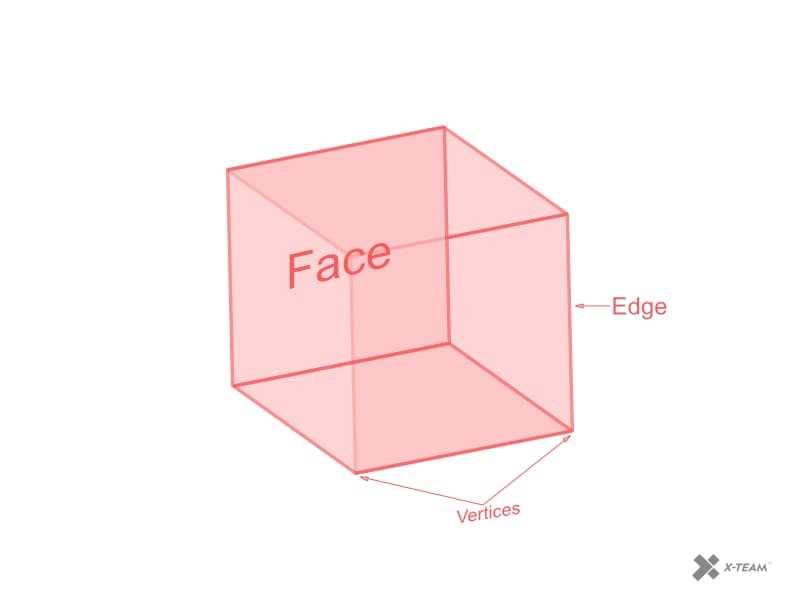

Vertices, Edges, and Faces

Complex art forms are usually made out of primitive shapes. This is true in applications like Photoshop and Illustrator, and the same applies to the 3D space.

A vertex is a point where two or more lines or edges meet to form an angle. In a 3D context (unless you are using the THREE.js ShapeGeometry), a vertex is the meeting point of 3 or more edges. The plural is vertices.

An edge joins 2 vertices and is shared by 2 faces.

That obviously gives away what a face is. A face is the flat surface that is bounded by edges in a 3D object.
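These definitions can be checked on a cube in plain JavaScript, without any library. The sketch below describes a unit cube with 8 vertices and 6 square faces, derives the edges from the faces, and confirms that every edge joins 2 vertices and is shared by exactly 2 faces. The data layout and the collectEdges name are my own. THREE.js itself splits each square into two triangles, so its face count for a box is higher.

```javascript
// Corners of a unit cube, indexed 0..7.
const vertices = [
  [0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0],
  [0, 0, 1], [1, 0, 1], [1, 1, 1], [0, 1, 1],
];

// Each face lists its four corner indices in order around the square.
const faces = [
  [0, 1, 2, 3], // z = 0
  [4, 5, 6, 7], // z = 1
  [0, 1, 5, 4], // y = 0
  [3, 2, 6, 7], // y = 1
  [0, 3, 7, 4], // x = 0
  [1, 2, 6, 5], // x = 1
];

// Map each undirected edge "a-b" to the number of faces that use it.
function collectEdges(faceList) {
  const edges = new Map();
  for (const face of faceList) {
    face.forEach((a, i) => {
      const b = face[(i + 1) % face.length];
      const key = a < b ? `${a}-${b}` : `${b}-${a}`;
      edges.set(key, (edges.get(key) || 0) + 1);
    });
  }
  return edges;
}

const edges = collectEdges(faces);
// Euler's formula for a convex solid: V - E + F = 2, here 8 - 12 + 6.
const eulerCharacteristic = vertices.length - edges.size + faces.length;
```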

To build complex geometries, we may modify these parts of a primitive shape to make something completely new.

An instantiated geometry object will have vertices and faces properties (this does not apply to BufferGeometries).

When you are not modifying these properties of a geometry, it is more performant to use a BufferGeometry.

These properties hold arrays of the vertices and faces, which can be modified as shown:

const geometry = new THREE.BoxGeometry(20, 20, 20);

// nudge every vertex along the x axis by a random amount

geometry.vertices.forEach((vertex) => {

vertex.x += Math.random() * 10;

});
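A BufferGeometry has no vertices array; its coordinates live in a position attribute instead. As a hedged sketch of the same idea for that case, the hypothetical jitterX helper below works on any object that exposes count, getX, setX and needsUpdate, which is the shape of a three.js buffer attribute. The random source is a parameter so the result can be made repeatable.

```javascript
// Shift every vertex of a BufferGeometry-style position attribute along x.
// `random` is injectable so the result can be made repeatable.
function jitterX(position, amount, random = Math.random) {
  for (let i = 0; i < position.count; i++) {
    position.setX(i, position.getX(i) + random() * amount);
  }
  // Tell the renderer that the attribute data changed.
  position.needsUpdate = true;
  return position;
}

// Usage with a real geometry:
// jitterX(geometry.attributes.position, 10);
```

One caveat: a buffer-based box stores a separate copy of each corner for every face that touches it, so independent random offsets can pull the faces apart instead of deforming the box as a whole.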

As shown in the figure above, the array for a box will contain 8 vertices, and when each of them is changed to a random value you get this:

The x coordinate of each vertex object gets a new value each time the program is run. Here is a more complex example of modifying vertices:

Another way to create shapes would be to mix different primitive shapes, or to stack primitive shapes on top of one another, like the Christmas tree below:

Tip: The tree above can be controlled with a mouse or touch interaction. This is explained further below.
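Here is a rough sketch of the stacking idea. It is not the code behind the tree demo: the layer count, the sizes and the function names are assumptions. treeLayers only computes where each cone should go, and buildTree turns that into meshes inside a group, with T standing for the THREE namespace.

```javascript
// Describe a tree as stacked cones: each layer is narrower and sits higher.
function treeLayers(count, baseRadius, layerHeight) {
  const layers = [];
  for (let i = 0; i < count; i++) {
    layers.push({
      radius: baseRadius * (1 - i / (count + 1)),
      height: layerHeight,
      // Raise each layer by half a layer height so the cones overlap.
      y: i * layerHeight * 0.5,
    });
  }
  return layers;
}

// Turn the layer descriptions into meshes inside a group.
// T is the THREE namespace; `material` is shared by all cones.
function buildTree(T, material) {
  const tree = new T.Group();
  for (const layer of treeLayers(3, 2, 2)) {
    const geometry = new T.ConeGeometry(layer.radius, layer.height, 16);
    const cone = new T.Mesh(geometry, material);
    cone.position.y = layer.y;
    tree.add(cone);
  }
  return tree;
}
```

Grouping the cones means the whole tree can be moved or rotated as one object.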

Creating shapes in code can get really complex and tedious. This is where we take advantage of the loaders available in THREE.js. Working at an agency, you may have 3D design experts working in 3D software such as Maya, Blender, or Cinema 4D, and there are different loaders to work with files from such software. The OBJLoader (object loader), GLTFLoader, and ColladaLoader are some examples of loaders that can be used to build from existing models. Here is a list of available loaders in THREE.js.

If you use Blender, there is a Blender-to-THREE.js extension that lets you export models to JSON and import them into your code with the JSONLoader.

Here is an example banana model loaded with the OBJLoader:
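Loading follows a callback pattern: the loader fetches the file, parses it, and hands the resulting object to a callback. Here is a minimal sketch, assuming an OBJLoader instance is passed in. The function name and the file path in the usage comment are placeholders, not the ones used in the banana demo.

```javascript
// Load a model and add it to the scene once it arrives.
// `loader` is expected to be an OBJLoader instance, e.g. new THREE.OBJLoader().
function addModelToScene(loader, scene, url) {
  loader.load(
    url,
    (object) => scene.add(object), // called with the parsed model
    undefined, // progress callback, not used here
    (error) => console.error("Could not load", url, error)
  );
}

// Usage (the path is a placeholder):
// addModelToScene(new THREE.OBJLoader(), scene, "models/banana.obj");
```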

Adding Controls

3D rendering on the web will often need interactions. You will sometimes want to give users control over the camera movement in the scene with keys, mouse movement, or touch. This is especially useful for product showcases, as with this bike helmet:

THREE.js has various controls, including one for VR. The OrbitControls was used in the tree demo above, and it supports mouse and touch interactions. TrackballControls and DragControls are similar to OrbitControls in that they also respond to pointer input, which makes them well suited to web experiences. TrackballControls moves the camera as well, while DragControls lets users drag objects in the scene.

// OrbitControls

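const orbit = new THREE.OrbitControls(camera, renderer.domElement);

// TrackballControls

const trackball = new THREE.TrackballControls(camera, renderer.domElement);

// DragControls (takes the array of draggable objects first, here the cube from earlier)

const drag = new THREE.DragControls([cube], camera, renderer.domElement);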
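Creating the controls is often enough, but some options need cooperation from the render loop. Here is a minimal sketch that turns on damping (a little inertia after the user lets go) for an OrbitControls instance. The setUpOrbit name and the damping factor of 0.1 are assumptions chosen for illustration.

```javascript
// Enable damping on an OrbitControls instance and return a per-frame
// callback. With damping on, update() has to be called on every frame.
function setUpOrbit(controls, renderer, scene, camera) {
  controls.enableDamping = true;
  controls.dampingFactor = 0.1; // arbitrary value chosen for illustration
  return function renderFrame() {
    controls.update();
    renderer.render(scene, camera);
  };
}

// Usage in the render loop from the template:
// const renderFrame = setUpOrbit(orbit, renderer, scene, camera);
// const render = () => { requestAnimationFrame(render); renderFrame(); };
// render();
```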

Final Thoughts

3D interactions on the web provide a way to create great experiences for users. When used with virtual reality, they simulate a real-world experience. WebGL can also help in building amazing games for the web platform. We have taken most software from the desktop to the web; more games can go on the web and be global. That will make some Mac users happy about games currently available on Windows only.
