DevOps is a mindset that encourages communication, collaboration, integration, and automation between software development teams and IT operations teams. Its aim is to remove the silos between teams and at the very least reduce, if not completely eliminate, the amount of finger-pointing when something goes wrong.

DevOps has become an extremely popular way of developing software and is used by companies such as Amazon, Netflix, Etsy, Pinterest, and Google. Done well, the DevOps mindset can significantly improve the quality and speed of software releases, fixes, features, and updates.

Although each company will organize DevOps somewhat differently, there are two main levels of the DevOps delivery chain: Continuous Integration (CI) and Continuous Delivery (CD).

Continuous Integration means frequently integrating newly developed code with the main body of code that's set to be released. At the very minimum, this involves checking in code, compiling it, and running some basic validation testing.

If you don't do this, the different branches of code that developers are working on will start diverging quite drastically from the master branch. Integrating all the separate branches back into the master branch then becomes an extremely laborious process. CI prevents this from happening.
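To make the "check in, compile, validate" loop a little more concrete, here is a minimal sketch of what a CI runner does on every check-in: run a fixed list of steps in order and stop at the first failure. The function name `run_pipeline` and the example steps are assumptions made for illustration; real CI services such as the ones discussed later in this article are configured rather than hand-written like this.

```python
import subprocess
import sys
from typing import List, Sequence, Tuple

Step = Tuple[str, List[str]]  # (step name, command to execute)


def run_pipeline(steps: Sequence[Step]) -> Tuple[bool, List[str]]:
    """Run each step in order and stop at the first one that fails.

    Returns (success, names of the steps that completed).
    """
    completed: List[str] = []
    for name, command in steps:
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            # A failing step blocks the integration, like a red build would.
            print(f"step '{name}' failed:\n{result.stderr}")
            return False, completed
        completed.append(name)
    return True, completed


# Hypothetical steps for a Python project: byte-compile, then run unit tests.
EXAMPLE_STEPS: List[Step] = [
    ("compile", [sys.executable, "-m", "compileall", "-q", "."]),
    ("test", [sys.executable, "-m", "unittest", "discover"]),
]
```

Calling `run_pipeline(EXAMPLE_STEPS)` from the root of a repository would run both steps. The important property is that a failure is reported right away, while the change is still small and fresh in the developer's mind.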

Continuous Delivery sits on top of CI. Depending on how good your automated testing is, it means your code is always either nearly or entirely ready to deploy. A select few companies have such a fine-tuned DevOps process that newly created code gets pushed out to production with hardly any human intervention, although usually only to a small percentage of their total user base, with an automated feedback loop to monitor quality and usage.
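Releasing to a small percentage of users first is often called a canary release. The sketch below shows one common way to pick that slice: hash each user ID into a stable bucket, so the same user always gets the same version. The names `in_canary` and `should_roll_back`, and the 1% tolerance, are assumptions for illustration rather than any particular company's implementation.

```python
import hashlib


def in_canary(user_id: str, rollout_percent: float) -> bool:
    """Return True if this user falls inside the canary slice.

    Hashing keeps the assignment stable: the same user always lands in
    the same bucket, so they do not flip between versions.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < rollout_percent * 100


def should_roll_back(canary_error_rate: float,
                     baseline_error_rate: float,
                     tolerance: float = 0.01) -> bool:
    """Feedback-loop check: roll back if the canary is clearly worse."""
    return canary_error_rate > baseline_error_rate + tolerance
```

With `rollout_percent=5`, roughly one user in twenty sees the new code. An automated monitor can then compare error rates between the two groups and call `should_roll_back` before the release is widened.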

Interviewing a DevOps Engineer at X-Team

Lucas Clavero is a DevOps engineer at X-Team. I sent him a few questions to better understand what got him into DevOps, what some of the guiding principles of DevOps are, and what his DevOps stack looks like.

Hi Lucas! Thank you for taking the time to talk to me. Let me begin by asking how and why you decided on DevOps as the focus of your career?

Ever since I was a kid I've been really passionate about technology, so my first job was as an IT technician for a big company. I took my first steps back then, learning basic concepts of infrastructure and Linux environments. I decided that my next job should be as a SysAdmin. I was at college at that time improving my coding skills, and I realized that writing code was something I wanted to add to my career. That's when I started to hear about the concept of DevOps (it was almost 10 years ago and DevOps was just being introduced). It was the perfect balance between coding and working with infrastructure that caught my attention, and I've been working as a DevOps engineer ever since.

The perfect combination. But DevOps is quite a broad term. Are there areas in DevOps that you focus on more than others?

In most projects, you're likely to be the only DevOps engineer, so dev teams are probably going to rely on you for infrastructure-related stuff. That's why I focus a little bit more on CI/CD, architecture, and scalability of cloud environments. Also, one of the backbones for a DevOps engineer must be automation; if you have to do the same task twice, then it has to be automated.

Automation seems to be a guiding principle of DevOps. What are the other most important guiding principles that companies need to stick to when going DevOps?

You need to focus on efficiency. If you're going to deploy changes to your applications every day, you need a good solution to avoid extra work for developers. In order to do that, you'll need a good, automated CI/CD setup. Then you'll need to take care of the availability of your applications, always looking to optimize infrastructure utilization. That's something you can achieve by setting auto-scaling policies.

Once you have a continuous availability plan, you'll need to monitor the behavior of your infrastructure using DevOps tools, which can work in both reactive and proactive modes and which offer continuous visibility of the state of resources so you can take corrective actions. Finally, you always need to take care of the security of your infrastructure: use load balancers to distribute incoming application traffic, and use firewalls or security policies to prevent public access to your cloud.

Sounds complex. What's the current DevOps stack you're using right now to enable all this?

For AWS, I'm using CodeBuild and CodePipeline for CI, both of which are deployed inside ECS clusters, so I'm using Docker as well. I've recently configured an ELK stack to centralize all logging, and I'm about to add a Grafana + Prometheus setup for alerts and notifications management too. I like to automate the infrastructure deployment with Terraform. The whole HashiCorp stack is a great addition to any DevOps setup. I'm also a big enthusiast of serverless, so I'm trying to automate different tasks using AWS Lambda.

And why these tools?

Most of them are open source and that's something we want to support 🙂. Also, I've had the opportunity to work on several projects and try different tools, and I'm always trying to use the best ones. The ELK stack integrates well with all types of log sources, and you can stream those logs into Kibana in a consistent format. Grafana is a superb tool to visualize your infrastructure behavior and it's really easy to customize. Finally, I prefer AWS services to GCP or Microsoft Azure, since I find AWS more flexible and consistent.

I see. And in general, what questions does a company need to ask when choosing DevOps tools?

"What do we want to achieve?" As simple as it sounds, companies should take a second to think about what they want to take care of with DevOps tools. Sometimes, you don't need a whole ELK stack to check system logs, or a Grafana server to check the uptime of your applications. If that's the case, my advice is to keep it simple; try to avoid spending too much time on simple tasks.

Next, "Do we want to use this tool for the long term?" That's important too. Maybe we are talking about a small company that doesn't want to spend money or time on DevOps tools right now, but they should consider that the project can get bigger or that the application could eventually get more traffic, and it's always nice to have a good monitoring tool or a fully automated CI pipeline.

Finally, "Is this tool going to work in our infrastructure?" Choosing between tools can be tricky, and sometimes you can achieve the same goal with different setups. One of the most important things you should think about is how well that tool integrates with your architecture. Sometimes you'll find yourself spending extra effort trying to make a tool work, and that is something you want to avoid.

Lucas' DevOps Stack

Docker

Docker has been considered the number one containerization platform since its launch in 2013. It allows developers to isolate applications into separate containers, so they're more portable and more secure. You can package your app, including all its dependencies, and deploy it in a different environment if you so wish.

In Stack Overflow's Developer Survey, Docker was cited as the third-most popular platform in use, the second-most-loved, and the most wanted. On our blog, we've written before on how you can keep your machine clean with Docker.

Amazon Web Services (AWS)

AWS provides on-demand, reliable, and scalable cloud computing platforms to individuals and companies. It's considered the pioneer in cloud computing and has a whopping market share of around 34%. Azure, Microsoft's cloud offering, is a distant runner-up at 15% market share.

AWS CodeBuild is a fully managed continuous integration service that compiles source code, runs tests, and produces software packages that are ready to deploy.

AWS CodePipeline is a fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates.

AWS Lambda is a computing service that runs code in response to events and automatically manages the computing resources required by that code.
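As a rough illustration of "code that runs in response to events", a Python Lambda function is just a function that receives an event and a context object. The sketch below assumes a simplified S3 upload notification as the trigger. The function name `handler` and the shape of the returned dictionary are choices made for this example, and a real event carries many more fields.

```python
import json


def handler(event, context):
    """Entry point that Lambda invokes; `event` carries the trigger payload.

    Assumes a simplified S3 notification in which each record names the
    object that was uploaded.
    """
    records = event.get("Records", [])
    keys = [r["s3"]["object"]["key"] for r in records if "s3" in r]
    # A real function would do its work here: resize an image, parse a file...
    return {"statusCode": 200, "body": json.dumps({"processed": keys})}
```

There is no server to provision in this picture. The function is invoked once per event, and AWS decides how many copies to run at the same time.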

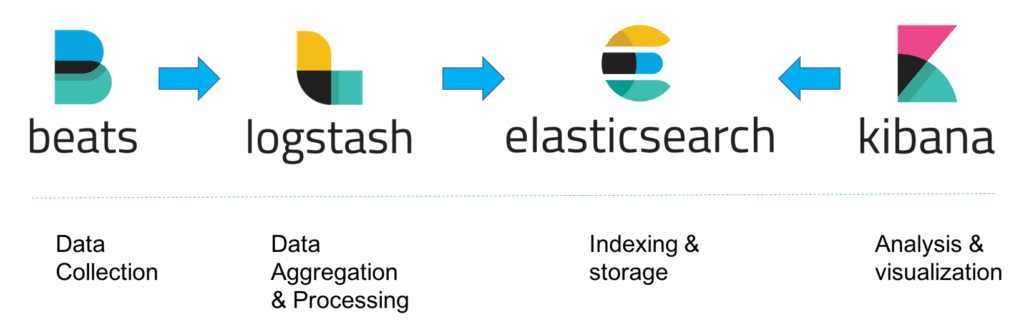

ELK Stack

ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. Elasticsearch is a search and analytics engine. Logstash is a server-side data processing pipeline that ingests data from multiple sources simultaneously, transforms it, and then sends it to a "stash" like Elasticsearch. Kibana lets users visualize the data in Elasticsearch with charts and graphs.
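Centralized logging works best when every application emits logs in the same machine-readable shape. Here is a minimal sketch, using only Python's standard `logging` module, of a formatter that writes one JSON object per line, which a pipeline like Logstash can parse without custom patterns. The class name `JsonFormatter` and the chosen field names are assumptions for this example, not a required schema.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "@timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(entry)


def build_logger(name: str) -> logging.Logger:
    """Create a logger that prints JSON lines to standard error."""
    logger = logging.getLogger(name)
    stream = logging.StreamHandler()
    stream.setFormatter(JsonFormatter())
    logger.addHandler(stream)
    logger.setLevel(logging.INFO)
    return logger
```

A call such as `build_logger("checkout").info("order %s paid", "A-17")` then prints a line that can be shipped to the log pipeline and searched by field in Kibana.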

Grafana

Grafana provides data visualization and monitoring, with support for Graphite, InfluxDB, Prometheus, Elasticsearch, and many more data sources. It has a query editor customized for each data source, with that source's specific syntax. Its dashboards and panels are quite versatile, meaning you can tailor them to specific projects.

Terraform

Terraform is a tool for building, changing, and versioning infrastructure safely and efficiently. It can manage existing and popular service providers as well as custom in-house solutions. Terraform enables the concept of "infrastructure as code", which is the process of managing and provisioning computer data centers through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools.
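The core idea behind infrastructure as code can be sketched in a few lines: you describe the state you want, the tool compares it with what currently exists, and it works out which actions close the gap. The toy `plan` function below is an illustration of that idea only. It is not Terraform's API, and the resource names are hypothetical.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, dict]  # resource name -> its settings


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Compare desired and current state and list the actions needed."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() | current.keys()):
        if name not in current:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
        # Identical resources need no action.
    return actions


# Hypothetical example: what the definition files say vs. what is running.
desired_state = {"web": {"size": "small"}, "db": {"size": "large"}}
current_state = {"web": {"size": "medium"}, "cache": {}}
print(plan(desired_state, current_state))
# [('delete', 'cache'), ('create', 'db'), ('update', 'web')]
```

Because the desired state lives in files, it can be reviewed, versioned, and rolled back like any other code.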

Tell us about your experience with DevOps. What's your DevOps stack?
